Rethinking the Source of AI Hallucinations

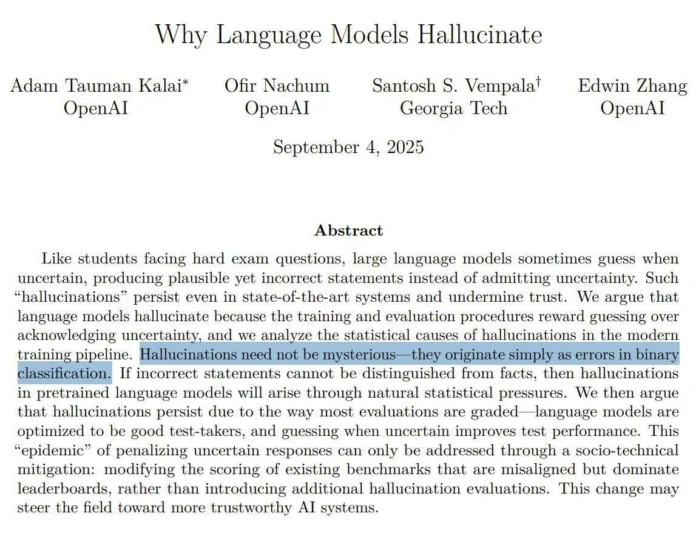

A new study from OpenAI, in partnership with researchers at Georgia Tech, suggests that the persistent problem of AI “hallucinations” may not be the result of mysterious design failures but rather a consequence of how large language models (LLMs) are trained and evaluated.

The paper, released on September 4, 2025, argues that the scoring systems widely used to measure model performance inadvertently encourage chatbots to bluff when they are uncertain instead of admitting they don’t know. The researchers describe this as a fundamental evaluation misalignment—a structural issue in AI development that pushes systems toward overconfidence.

Even advanced models, including OpenAI’s own GPT-5, still produce confident but factually incorrect responses. The team argues that these hallucinations persist not because of flawed architectures but because of the incentives built into evaluation frameworks.

Statistical Roots of Overconfident Errors

The authors (Adam Tauman Kalai, Ofir Nachum, and Edwin Zhang of OpenAI, and Santosh Vempala of Georgia Tech) trace hallucinations to a mathematical root. Their analysis links these mistakes directly to ordinary errors in binary classification.

In other words, even if the training data were perfectly accurate, the statistical nature of prediction would still guarantee some errors. Knowledge gaps are most likely for facts that appear only once in the training set, so-called “singleton” facts. With so little evidence to go on, the model is statistically prone to guessing and often produces fabricated answers.

To illustrate the point, the researchers asked several leading AI models a simple factual question: the birthday of co-author Adam Kalai. Despite being instructed to answer “only if known,” systems including DeepSeek-V3 and ChatGPT produced confident but incorrect dates; across three attempts, DeepSeek-V3 alone gave three different ones. None fell in autumn, the season of Kalai’s actual birthday, underscoring how even state-of-the-art models struggle with rare facts.
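The paper ties failures like this to a simple statistic: how many facts show up only once in the training data. Its own illustration is that if 20% of birthday facts appear exactly once, a base model should be expected to hallucinate on at least roughly 20% of birthday questions. The sketch below computes such a singleton rate over a toy list of fact mentions, dividing the number of once-seen facts by the total number of mentions, similar to the classic Good-Turing “missing mass” estimate. The fact keys and the idea that mentions arrive pre-labeled are hypothetical; real pretraining corpora are not annotated this way.

```python
from collections import Counter
from typing import Hashable, Iterable


def singleton_rate(fact_mentions: Iterable[Hashable]) -> float:
    """Share of mentions whose fact appears exactly once (Good-Turing style).

    Each element of `fact_mentions` is one occurrence of a fact in a corpus.
    The keys are hypothetical labels used only for illustration.
    """
    mentions = list(fact_mentions)
    if not mentions:
        return 0.0
    counts = Counter(mentions)
    # Facts the "model" saw only once: too little evidence to memorize reliably.
    singletons = sum(1 for n in counts.values() if n == 1)
    # Divide by total mentions, as in the Good-Turing missing-mass estimate.
    return singletons / len(mentions)


# Hypothetical toy corpus: two well-known birthdays repeated, two seen once.
mentions = (
    ["famous_person_a_birthday"] * 5
    + ["famous_person_b_birthday"] * 3
    + ["obscure_person_c_birthday", "obscure_person_d_birthday"]
)
print(singleton_rate(mentions))  # 0.2
```

A rate of 0.2 in this toy corpus corresponds to the paper’s 20% example: the two obscure birthdays are exactly the kind of fact a model can only guess at.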

“Hallucinations need not be mysterious—they originate simply as errors in binary classification,” the researchers wrote.
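The paper makes this claim precise by comparing generation with an “Is-It-Valid” (IIV) task, in which a model only has to label a candidate response as valid or erroneous. Stated loosely, and leaving out the correction terms in the formal theorem, a model’s generative error rate is at least about twice its IIV misclassification rate (the symbols below are shorthand for those two rates):

```latex
\mathrm{err}_{\text{gen}} \;\gtrsim\; 2 \cdot \mathrm{err}_{\text{iiv}}
```

If valid statements cannot be reliably told apart from plausible-sounding errors, some rate of hallucination follows from the statistics alone.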

Why Current Benchmarks Encourage Guessing

The study points to a deeper flaw in the way AI performance is judged. Most evaluation systems—such as MMLU-Pro, GPQA, and SWE-bench—use binary right-or-wrong scoring. Under these rules, expressing uncertainty is penalized just as harshly as providing an incorrect answer.

As a result, language models are systematically trained to guess confidently: a guess has at least some chance of earning credit, while an admission of ignorance is guaranteed to score zero.

“Language models are optimized to be good test-takers, and guessing when uncertain improves test performance,” the researchers explained. The situation resembles a multiple-choice exam in which students are better off guessing blindly than leaving a question blank.
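A few lines of arithmetic make the test-taking incentive concrete. This sketch assumes the binary grading described above (1 point for a correct answer, 0 for a wrong one, 0 for “I don’t know”) and compares the expected score of a blind guess among k options with the score for abstaining. The function name is illustrative and not taken from any benchmark’s code.

```python
def expected_binary_score(p_correct: float) -> float:
    """Expected score under right-or-wrong grading.

    Correct answers earn 1 point; wrong answers and "I don't know" both earn 0.
    """
    return p_correct * 1.0 + (1.0 - p_correct) * 0.0


IDK_SCORE = 0.0  # abstaining always scores zero under binary grading

for k in (2, 4, 10):
    p_guess = 1.0 / k  # chance that a blind guess among k options is right
    gain = expected_binary_score(p_guess) - IDK_SCORE
    print(f"{k} options: guessing beats abstaining by {gain:.2f} points")
```

Because the expected score of guessing is never below zero, and is strictly positive whenever there is any chance of being right, a model optimized for this metric never gains anything by saying “I don’t know.”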

The team also noted that even specialized hallucination-focused tests cannot outweigh the influence of the hundreds of widely adopted benchmarks that encourage overconfidence. This has created a misaligned incentive structure across the entire AI ecosystem.
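The point about benchmark volume can be checked with simple averaging. This sketch assumes a hypothetical leaderboard that averages 99 binary-graded benchmarks with a single hallucination-aware benchmark using the +1 / -2 / 0 scoring the researchers propose, and two hypothetical models with made-up rates: one that almost never abstains and one that abstains often. It also assumes, for simplicity, that each model has the same rates on every benchmark.

```python
def suite_average(correct: float, wrong: float, n_binary: int = 99,
                  n_penalized: int = 1, penalty: float = 2.0) -> float:
    """Mean score across a hypothetical benchmark suite.

    Assumes the model has the same correct/wrong rates on every benchmark.
    Binary benchmarks score only correct answers; the penalized benchmark
    also subtracts `penalty` points per wrong answer (abstentions score 0).
    """
    binary_score = correct
    penalized_score = correct - penalty * wrong
    total = n_binary * binary_score + n_penalized * penalized_score
    return total / (n_binary + n_penalized)


# Hypothetical rates (fractions of questions), not figures from the paper.
guesser = suite_average(correct=0.26, wrong=0.73)    # abstains on 1%
abstainer = suite_average(correct=0.24, wrong=0.24)  # abstains on 52%

print(f"guesser:   {guesser:.4f}")
print(f"abstainer: {abstainer:.4f}")
```

Even though the guesser is heavily penalized on the one hallucination-aware benchmark, it still comes out ahead on the averaged leaderboard, which is the imbalance the researchers describe.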

A New Approach: Rewarding Honest Uncertainty

To counteract this problem, the researchers propose a simple but potentially transformative fix: recalibrate evaluation systems to explicitly reward honest uncertainty.

Instead of grading answers as simply right or wrong, benchmarks could state an explicit confidence threshold in their instructions. For example, models might be told:

- Correct answers earn +1 point
- Incorrect answers lose 2 points
- “I don’t know” responses earn 0 points

This structure discourages reckless guessing while rewarding models for abstaining when their confidence is low. It resembles older standardized tests that deducted points for wrong answers to discourage students from guessing at random.
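The scoring rule above implies a confidence cut-off. The paper phrases this as a threshold t stated in the instructions, with wrong answers costing t/(1 - t) points; a penalty of 2 corresponds to t = 2/3, so answering pays off only when the model is more than two-thirds sure. The helper names below are illustrative, and the sketch assumes the model has a usable estimate of its own probability of being right.

```python
def expected_answer_score(p_correct: float, reward: float = 1.0,
                          penalty: float = 2.0) -> float:
    """Expected points for answering when right with probability p_correct."""
    return p_correct * reward - (1.0 - p_correct) * penalty


def confidence_threshold(reward: float = 1.0, penalty: float = 2.0) -> float:
    """Break-even confidence, where p * reward == (1 - p) * penalty."""
    return penalty / (reward + penalty)


def should_answer(p_correct: float, reward: float = 1.0,
                  penalty: float = 2.0) -> bool:
    """Answer only if the expected score beats the 0 points for abstaining."""
    return expected_answer_score(p_correct, reward, penalty) > 0.0


print(confidence_threshold())  # roughly 0.667, i.e. 2/3 for the +1 / -2 / 0 rule
for p in (0.5, 0.6, 0.8, 0.95):
    decision = "answer" if should_answer(p) else "say I don't know"
    print(f"confidence {p:.2f}: expected {expected_answer_score(p):+.2f} -> {decision}")
```

Setting `penalty` to 0 recovers binary grading: the threshold drops to 0, and any guess with a nonzero chance of being right becomes worth making.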

The study found that a model that abstained on as many as 52% of questions produced far fewer wrong answers than one that abstained only 1% of the time. Although its raw accuracy was lower, its overall reliability improved dramatically.
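The gap between raw accuracy and reliability is easy to see with two hypothetical result profiles. The rates below are made up for illustration and only echo the 52% and 1% abstention rates mentioned above; the penalized score applies the +1 / -2 / 0 rule.

```python
from dataclasses import dataclass


@dataclass
class Outcomes:
    """Fractions of questions in each outcome (should sum to 1)."""
    correct: float
    wrong: float
    abstain: float


def accuracy(o: Outcomes) -> float:
    # Raw accuracy: share of all questions answered correctly.
    return o.correct


def error_rate(o: Outcomes) -> float:
    # Share of all questions answered with a confident wrong answer.
    return o.wrong


def penalized_score(o: Outcomes, penalty: float = 2.0) -> float:
    # +1 per correct answer, -penalty per wrong answer, 0 per "I don't know".
    return o.correct - penalty * o.wrong


# Hypothetical profiles, not results reported in the paper.
cautious = Outcomes(correct=0.24, wrong=0.24, abstain=0.52)
eager = Outcomes(correct=0.26, wrong=0.73, abstain=0.01)

for name, o in (("cautious", cautious), ("eager", eager)):
    print(f"{name}: accuracy={accuracy(o):.2f} "
          f"errors={error_rate(o):.2f} penalized={penalized_score(o):+.2f}")
```

Ranked by raw accuracy, the eager profile wins; ranked by the penalized score, the cautious one does, which is the shift in perspective the study argues for.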

Industry-Wide Challenge Ahead

The OpenAI team stresses that solving hallucinations is not purely a technical challenge but a socio-technical one. For the solution to succeed, AI developers, researchers, and benchmarking organizations must adopt new evaluation standards across the industry.

“Better incentives for confidence calibration are critical if we want models to become more trustworthy,” the paper concludes.

The study suggests that by shifting from punishing uncertainty to rewarding humility, the next generation of AI systems could deliver fewer hallucinations, greater transparency, and more dependable outputs. Achieving this, however, requires a collective effort to rethink how the industry measures machine intelligence.

Looking Forward

The findings come at a pivotal time as AI adoption accelerates across industries, from healthcare to finance to education. Trust in chatbot responses is increasingly essential, and hallucinations represent a major barrier to widespread acceptance.

OpenAI’s proposed shift in evaluation standards could help address this trust gap. If widely adopted, it may redefine how progress in AI is measured—not by raw accuracy alone but by the ability to recognize and communicate uncertainty responsibly.

For now, the study underscores an important point: hallucinations may be evidence not of AI’s mysterious limits but of the incentive structures humans have built around it.

Sources: OpenAI